News

- 2022.05.17: Our paper is accepted by IJCV!

- 2022.05.17: The 2nd Occluded Video Instance Segmentation Challenge is held in ECCV 2022 Workshop on Multiple Object Tracking and Segmentation in Complex Environments. Call for papers!

- 2021.11.14: The code for our CMaskTrack R-CNN and metrics has been published on Github!

- 2021.10.10: The paper that introduces our dataset and the ICCV 2021 challenge is accepted by NeurIPS 2021 Datasets and Benchmarks Track!

- 2021.06.01: The Challenge hosted by our workshop has started. Call for challenge participation!

- 2021.06.01: The 1st Occluded Video Instance Segmentation Workshop will be hold in conjunction with ICCV 2021. Call for Workshop Paper Submissions!

Overview

Abstract

Can our video understanding systems perceive objects when a heavy occlusion exists in a scene?

To answer this question, we collect a large-scale dataset called OVIS for occluded video instance segmentation, that is, to simultaneously detect, segment, and track instances in occluded scenes. OVIS consists of 296k high-quality instance masks from 25 semantic categories, where object occlusions usually occur. While our human vision systems can understand those occluded instances by contextual reasoning and association, our experiments suggest that current video understanding systems are not satisfying.

The difficulty of precisely localizing and reasoning heavily occluded objects in videos reveals that current deep learning models perform differently with the human vision system, and confirms that it is urgent to design new paradigms for video understanding.

For more details, please refer to our paper.

OVIS consists of:

- 296k high-quality instance masks

- 25 commonly seen semantic categories

- 901 videos with severe object occlusions

- 5,223 unique instances

Given a video, all the objects belonging to the pre-defined category set are exhaustively annotated. All the videos are annotated per 5 frames.

Distinctive Properties

- Severe occlusions. The most distinctive property of our OVIS dataset is that a large portion of objects is under various types of severe occlusions caused by different factors.

- Long videos. The average video duration and the average instance duration of OVIS are 12.77s and 10.05s respectively.

- Crowded scenes. On average, there are 5.80 instances per video and 4.72 objects per frame.

Categories

The 25 semantic categories in OVIS are Person, Bird, Cat, Dog, Horse, Sheep, Cow, Elephant, Bear, Zebra, Giraffe, Poultry, Giant panda, Lizard, Parrot, Monkey, Rabbit, Tiger, Fish, Turtle, Bicycle, Motorcycle, Airplane, Boat, and Vehicle.

For more details, please refer to our paper.

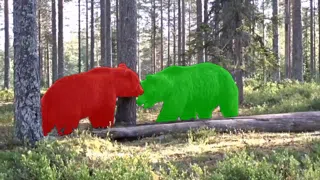

Visualization

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Download

Data

We provide the frames and annotations.- Frames: JPG format. The total size is 12.7GB.

- Annotations: JSON format.

Dataset Download

Evaluation Server (Old Evaluation Servers: 1, 2, 3)

Code

The code and models of the baseline method are released on github.

The code of our evaluation metric is also provided on github

Citation

If the OVIS dataset helps your research, please cite:title={Occluded Video Instance Segmentation: A Benchmark},

author={Jiyang Qi and Yan Gao and Yao Hu and Xinggang Wang and Xiaoyu Liu and Xiang Bai and Serge Belongie and Alan Yuille and Philip Torr and Song Bai},

journal={International Journal of Computer Vision},

year={2022},

}